Introduction

MultiBin is a tool that harnesses the new HRTF reverberation opcodes (hrtfearly and hrtfreverb, available in Csound 5.15) using the Csound API and Python. The primary goal of the application is to allow dynamic multi-channel scenarios to be auditioned on standard headphones. Ultimately, however, it proved possible to design a more flexible tool, which can also be used as an interactive binaural environment. Users can define a room, place and move sound sources in it, and move around the space themselves. A head rotation parameter is included with future hardware extensions in mind.

I. Background

The accompanying article (Hrtfearly and Hrtfreverb, New Opcodes for Binaural Spatialisation) on the new HRTF reverb opcodes discusses the binaural paradigm, which is also dealt with in detail elsewhere [1],[3]. The process exploits the HRTF (head-related transfer function): a filter that is used to describe how sound is altered from source to auditory canal. HRTFs can be used in left and right ear pairs to artificially spatialise audio, exploiting sound localisation cues such as interaural differences and spectral alterations. Thus convincing artificial spatialisation can be achieved (optimally in headphones). MultiBin takes advantage of this process, as well as adding binaurally considered environmental or reverberation processing. Multiple sound sources can be accurately spatialised in headphones. The MultiBin paradigm exploits this potential by artificially spatialising each loudspeaker in a multi-channel setup, making virtual spatial loudspeaker setups possible. The tool is designed to be flexible and intuitive.

II. Application Use

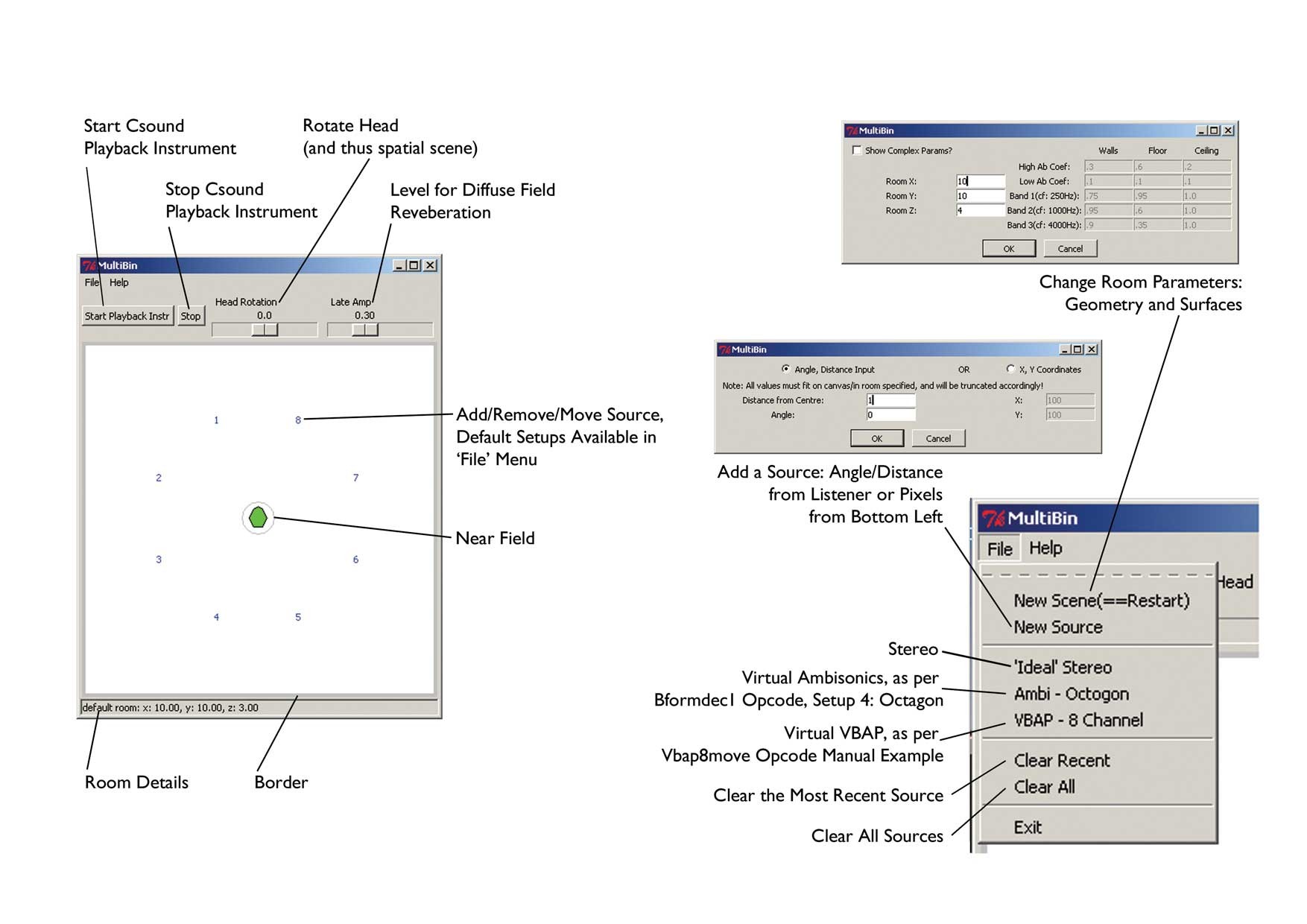

Figure 1 illustrates how to use MultiBin. The user sets up a scene to which sources can be added. In keeping with the original goal of the application, default multi-channel setups are included: 8-channel Ambisonics, 8-channel VBAP (Vector Based Amplitude Panning) and stereo. Users can either add a default setup to a scene or add individual sources, placing them where required. When creating a new scene, the user must decide on the room dimensions, and can optionally add frequency-dependent detail on the absorption or reflection of each surface. Sources can be added using canvas location or actual distance or angle parameters. Once added, the sources and the listener can be freely moved around the canvas and auditioned. The most recently added sources, or all sources, can be removed. The main processing window provides controls for starting and stopping processing, head rotation, the level of the diffuse field reverb (the reverb process is broken into early reflections and a later diffuse field, as discussed in the accompanying article), and source and listener location. Sources are limited to being inside the room and outside the listener's near field.
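The placement rule in the last sentence above can be sketched in Python. This is a minimal illustration rather than MultiBin's own code: the function names, the convention that 0 degrees points along the positive y axis with positive angles turning clockwise, and the 0.45 m near-field radius are all assumptions made for the example.

```python
import math

NEAR_FIELD_RADIUS = 0.45  # metres; hypothetical value chosen for this sketch


def polar_to_room(listener, distance, angle_deg):
    """Convert a distance/angle relative to the listener into room x, y (metres).

    Assumed convention: 0 degrees points along +y, positive angles turn clockwise.
    """
    theta = math.radians(angle_deg)
    x = listener[0] + distance * math.sin(theta)
    y = listener[1] + distance * math.cos(theta)
    return (x, y)


def is_valid_source(source, listener, room):
    """Return True if the source is inside the room and outside the near field.

    `room` is (width, depth) in metres, with one corner at the origin.
    """
    width, depth = room
    inside = 0.0 < source[0] < width and 0.0 < source[1] < depth
    separation = math.hypot(source[0] - listener[0], source[1] - listener[1])
    return inside and separation >= NEAR_FIELD_RADIUS
```

A GUI could call `is_valid_source` on every drag event and simply ignore moves that fail the check, which keeps both constraints in one place.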

The application can be run by executing the Python script MultiBin.py (your Csound installation must support Python). Users define source audio by preparing a simple text file, instrument1.inc. This file should create or play back the audio to be used; it is essentially a Csound instrument for playback. Audio should be patched to channels labeled in1, in2 and so on, using the chnset opcode as in the code that follows. Source 1 on the Python GUI listens on channel in1, source 2 on channel in2, etc. These channels thus refer directly to the numeric labels of sources on the canvas.

For example, a stereo file can be processed with:

    a1, a2 soundin "stereo.wav"
    ; fade out...
    k1 linsegr 1,1,1,0.1,0
    chnset a1*k1, "in1"
    chnset a2*k1, "in2"

This means that two sources will play back, with in1 linked to source 1 on the canvas and in2 linked to source 2. An Ambisonic file can be processed as shown below:

    a1, a2, a3, a4, a5, a6, a7, a8 soundin "ambi.wav"
    ; fade out...
    k1 linsegr 1,1,1,0.1,0
    chnset a1*k1, "in1"
    chnset a2*k1, "in2"
    chnset a3*k1, "in3"
    chnset a4*k1, "in4"
    chnset a5*k1, "in5"
    chnset a6*k1, "in6"
    chnset a7*k1, "in7"
    chnset a8*k1, "in8"
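Since the two examples above differ only in the number of channels, the lines can be generated rather than typed. The following Python sketch is an illustration only: the helper name `channel_lines` is hypothetical, and it reproduces just the lines shown above, so any surrounding text that instrument1.inc may need is not covered.

```python
def channel_lines(n_channels, filename, envelope="k1"):
    """Build Csound orchestra lines that route each file channel to in1..inN."""
    if n_channels < 1:
        raise ValueError("need at least one channel")
    # One audio variable per channel of the sound file: a1, a2, ...
    signals = ["a%d" % i for i in range(1, n_channels + 1)]
    lines = [
        '%s soundin "%s"' % (", ".join(signals), filename),
        "; fade out...",
        "%s linsegr 1,1,1,0.1,0" % envelope,
    ]
    # Channel inN is heard by source N on the canvas.
    for i, sig in enumerate(signals, start=1):
        lines.append('chnset %s*%s, "in%d"' % (sig, envelope, i))
    return lines


if __name__ == "__main__":
    print("\n".join(channel_lines(8, "ambi.wav")))
```

Calling `channel_lines(2, "stereo.wav")` yields the stereo example, and `channel_lines(8, "ambi.wav")` the Ambisonic one.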

Mono files can also be loaded into tables and read, etc. Perhaps the best starting point is the attached instrument, table.txt, which loads three short, varied samples into a table and loops them. Paste this text into instrument1.inc, run the script and add three new sources to the canvas (in the desired locations) while or before the audio is playing back. Move the sources or listener to audition the spatial scene. Experiment with the diffuse field level and head rotation. Perhaps define a different room for use with the same files. Note that unrealistically fast movement may cause noise, due to the complexity of the processing algorithms involved. The Python graphical interface controls used to manipulate these parameters are shown in Figure 1.

The stereo file, stereo.txt, illustrates an interesting application, externalising the included closely mixed pop song. Note that bringing down the later diffuse field level (to approximately 0.1) and taking some ring out of the room using the advanced surfaces parameters illustrates the audition capabilities of the tool. Move through the stereo field to audition the Precedence Effect, or phasing, etc.

Two .csd files which can be used to create a simple Ambisonic and a VBAP file are included. These audio files can then be used to audition sources circling the appropriate default multi-channel loudspeaker setups. Reducing the default diffuse field level is perhaps advisable again. Note that processing many sources with several reflections per source is processor intensive (for example 8-channel Ambisonics). If this becomes an issue with dynamic scenes, the user can alter the order of processing in the hrtfearly instrument in MultiBin.csd (reduce giorder from 1 to 0).
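To give a feel for why the reflection order matters, the sketch below counts image sources in the textbook image-source model of a rectangular ("shoebox") room. It is an assumption that hrtfearly counts reflections in exactly this way; the function names are hypothetical and the figures are only meant to show how quickly the work grows with order and with the number of sources.

```python
def image_source_count(order):
    """Count image sources up to `order` in a shoebox room, direct sound included.

    In the standard model, an image at lattice offset (i, j, k) has
    reflection order |i| + |j| + |k|.
    """
    if order < 0:
        raise ValueError("order must be non-negative")
    span = range(-order, order + 1)
    count = 0
    for i in span:
        for j in span:
            for k in span:
                if abs(i) + abs(j) + abs(k) <= order:
                    count += 1
    return count


def total_paths(n_sources, order):
    """Total number of sound paths to spatialise for a scene."""
    return n_sources * image_source_count(order)
```

Under this model, an 8-source scene needs 8 paths at order 0 and 56 at order 1, which is consistent with the advice to drop the order when a dynamic scene becomes too demanding.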

In summary, once audio is loaded as above, users can start the processing and click and drag the sources and listener to audition the scene. Head rotation can also be processed in real time, as well as control of the level of the diffuse field. In this way the power of the new HRTF opcodes is harnessed in an intuitive, immediate and useful fashion.

Files included in the examples folder are: the HRTF data files, the main MultiBin.csd and MultiBin.py. Clearly labeled text files for loading table based, Ambisonics, VBAP and stereo sources, audio samples for these files, and instrument1.inc to load the instrument and simple .csd files to create an Ambisonic and VBAP audio file are also included. You can also download the example files here: CartyMultiBinExs.zip

Figure 1: MultiBin usage

III. Code Detail

A detailed discussion of the code is beyond the scope of this article; an overview is perhaps more appropriate. Additional details can be found elsewhere [2],[3]. The Python code starts an instance of Csound and compiles a .csd, which listens for location messages from the main canvas. These messages, in turn, dictate the processing performed by Csound. The Python code deals with all GUI issues. Each new source on the canvas initialises an instance of the hrtfearly opcode. All audio is sent globally to an instance of the hrtfreverb opcode.
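The message flow described above can be sketched without reference to any particular Csound binding. In this Python illustration the class name, the channel names (srcx1, srcy1, headx, heady) and the pixel-to-metre scale are all hypothetical; `send` stands in for whatever control-channel setter the host API provides.

```python
class LocationSender:
    """Forward canvas positions to an audio engine through named control channels."""

    def __init__(self, send, metres_per_pixel):
        self.send = send               # callable(name, value); stand-in for the real API
        self.scale = metres_per_pixel  # converts canvas pixels to room metres

    def move_source(self, index, px, py):
        """Report the new position of source `index` (1-based, as on the canvas)."""
        self.send("srcx%d" % index, px * self.scale)
        self.send("srcy%d" % index, py * self.scale)

    def move_listener(self, px, py):
        """Report the new position of the listener."""
        self.send("headx", px * self.scale)
        self.send("heady", py * self.scale)


if __name__ == "__main__":
    channels = {}  # a plain dict plays the role of the engine here
    sender = LocationSender(channels.__setitem__, metres_per_pixel=0.01)
    sender.move_source(1, 250, 100)
    sender.move_listener(200, 200)
    print(channels)
```

Keeping the GUI ignorant of the engine in this way means the drag handlers only ever deal with canvas coordinates.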

The main .csd file, MultiBin.csd, sets up all Csound processing. It opens the user-defined input file and sets up global channels for head location, head rotation and late reverberation level. Each new source initialises an instance of the hrtfearly opcode, the parameters of which are defined in the Python code from the scene setup and the source or listener location. All audio is also processed by an instance of the hrtfreverb opcode. Portamento is used to help smooth GUI control.
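The effect of portamento on stepped GUI values can be illustrated with a one-pole smoother written in Python. This is a sketch of the general technique, not a transcription of MultiBin.csd: the class name is hypothetical, and the half-time and control rate used below are assumed values.

```python
class Portamento:
    """One-pole smoother: each step moves part of the way towards the target."""

    def __init__(self, half_time, control_rate, initial=0.0):
        # Choose the feedback so that the remaining distance to a fixed
        # target halves every `half_time` seconds.
        self.feedback = 0.5 ** (1.0 / (half_time * control_rate))
        self.value = initial

    def step(self, target):
        """Advance by one control period and return the smoothed value."""
        self.value = (1.0 - self.feedback) * target + self.feedback * self.value
        return self.value


if __name__ == "__main__":
    smoother = Portamento(half_time=0.1, control_rate=100)
    # A slider jumps from 0 to 1; print the first ten smoothed values.
    print([round(smoother.step(1.0), 3) for _ in range(10)])
```

A sudden jump in a position or rotation value is thus turned into a short glide, which avoids feeding abrupt parameter changes to the spatialisation opcodes.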

The user input file, instrument1.inc defines audio input, as described above.

IV. Uses

As discussed, multi-channel binaural audition is the primary application of MultiBin. For example, a user can set up a room, define absorption characteristics and audition an ideal stereo setup. They can move out of the sweet spot and around the room to experience non-ideal listening positions, the Precedence Effect, or phasing effects as they move. Additionally, non-sweet spot listening positions or dynamic listener positions can be auditioned in any multi-channel setup. For example, non-ideal listening can be compared in an Ambisonic and VBAP setup. Additionally, a situation whereby the physical constraints of a room imply non-ideal speaker locations can be auditioned. Users can also set up advanced speaker configurations or algorithms, such as Wave Field Synthesis.
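What changes when the listener leaves the sweet spot of a stereo pair can be made concrete with a little geometry. The Python sketch below is illustrative only: the function names are hypothetical, the loudspeakers are assumed to sit at plus and minus 30 degrees in front of the initial listening position, and the speed of sound is taken as roughly 343 m/s.

```python
import math

SPEED_OF_SOUND = 343.0  # m/s, approximate value for air at room temperature


def stereo_pair(listener, distance, half_angle_deg=30.0):
    """Place left and right loudspeakers in front of the listener (+y is front)."""
    theta = math.radians(half_angle_deg)
    dx = distance * math.sin(theta)
    dy = distance * math.cos(theta)
    left = (listener[0] - dx, listener[1] + dy)
    right = (listener[0] + dx, listener[1] + dy)
    return left, right


def arrival_difference_ms(listener, left, right):
    """Right-minus-left arrival time in milliseconds (positive: left arrives first)."""
    d_left = math.hypot(left[0] - listener[0], left[1] - listener[1])
    d_right = math.hypot(right[0] - listener[0], right[1] - listener[1])
    return (d_right - d_left) / SPEED_OF_SOUND * 1000.0
```

At the sweet spot the difference is zero. Moving the listener sideways makes the nearer loudspeaker arrive first, and it is this growing time difference that can be auditioned by dragging the listener across the canvas.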

The other main application is a more general binaural audition tool. Essentially, MultiBin thus serves as an interactive piece, with user definable audio input. Various dynamic sources can be placed around a listener, who can navigate the room.

It is hoped that the application encourages multi-channel composition and sound design. Working with multi-channel audio can be expensive, given the cost of audio hardware and playback systems, as well as impractical. Flexible, headphone-based multi-channel audition removes these constraints.

V. Conclusion and Future Possibilities

MultiBin offers flexible, dynamic multi-source/channel audition. Implementation was informed by a desired balance of accuracy and efficiency. Further accuracy in absorption filters, non-shoebox geometries and loudspeaker frequency responses could all be added. Furthermore, integration with a 3-dimensional graphics tool and head-tracking hardware could move the application further towards virtual reality. Spatialisation accuracy was, however, prioritised in this implementation. Spatially accurate direct sound and early reflections using high resolution HRTFs, together with flexible, frequency-dependent binaural diffuse field reverb, are the primary goal. However, expansion is possible and encouraged.

VI. Acknowledgements

This work was supported by the Irish Research Council for Science, Engineering and Technology: funded by the National Development Plan and NUI Maynooth. I would also like to thank Bill Gardner and Keith Martin for making their HRTF measurements available.

VII. References

[1] B. Carty, "Brian Carty," 2011. [Online]. Available: http://www.bmcarty.com/pubs.html. [Accessed Nov. 28, 2011]. Note: The above URL is no longer valid. The material is now accessible at http://www.bmcarty.ie/pubs [accessed February 19, 2014].

[2] B. Carty and V. Lazzarini, "MultiBin: A Binaural Audition tool," in Proceedings of the International Conference on Digital Audio Effects, Poster Session 2 (DAFx-10), September 6-10, 2010. [Online]. Available: http://dafx10.iem.at/proceedings/papers/LazzariniCarty_DAFx10_P19.pdf. [Accessed Dec. 12, 2011].

[3] B. Carty, "Movements in Binaural Space: Issues in HRTF Interpolation and Reverberation, with applications to Computer Music," NUI Maynooth ePrints and eTheses Archive, March 2011. [Online]. Available: http://eprints.nuim.ie/2580/. [Accessed Nov. 28, 2011].