Introduction

Computer musicians with experience in MIDI-based studio setups may find themselves lost when they first come to Csound. For some, Csound holds the promise of doing nearly anything one would want to do with sound, but when they dive into working with the tool they quickly become frustrated trying to get past the initial learning curve. The new user may not understand how to use Csound in ways that are familiar from their experience with the tools found in MIDI-based studios: synthesizers, effects, mixers, and parameter automation.

In this article, we will analyze a basic MIDI-based studio setup composed of instruments, effects, mixers, and automation. We will then discuss how to design a Csound project that achieves each of those features. An example Csound CSD project file is used to demonstrate a working implementation of the design. The reader is expected to be familiar with MIDI-based studio environments and to have a basic understanding of Csound.

Analysis of MIDI-based Studio Environments

Instruments and Mixer

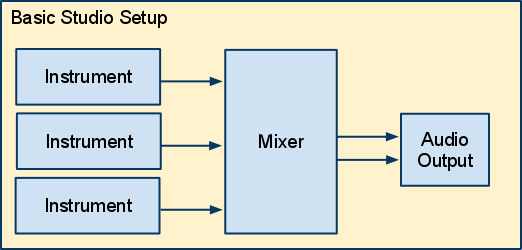

The following diagrams show the modules and signal flowin a basic MIDI-based studio environment. The first diagram:

Figure 1. Basic MIDI-studio Setup

is an overview picture of a studio. The diagram shows threeinstruments connected to a mixer that in turn mixes down to a stereo signal and outputs the final signal to an audio output (i.e. wave file, speakers).

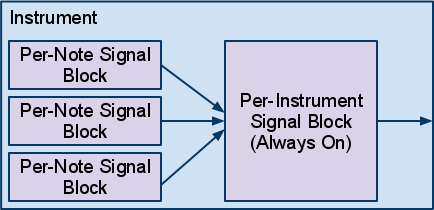

Figure 2. Internal View of an Instrument

Figure 2 shows that a single instrument may be further broken down into audio modules. In electronic and computer music, instruments are generally built from two types of signal blocks: one that generates sound on a per-note basis, and one that is always on and processes signals on a per-instrument basis. The per-note signal block that generates sound may also include filters and other sound-modifying modules, while the per-instrument signal block is generally composed of sound-processing modules such as filters, reverb, and chorus. A MIDI instrument takes the audio signals generated by the individual per-note signal blocks, sums them, and either outputs the summed signal directly or processes it further in an always-on per-instrument signal block.

It is worth noting that MIDI-based instruments usually output either a mono or a stereo signal. If the instrument outputs a mono signal, that signal is often routed to a single input channel on a mixer and panning is controlled by the mixer, converting the mono signal into a stereo signal. If the instrument outputs a stereo signal, the signal is often routed to two input channels on a mixer, with the left signal panned hard left and the right signal panned hard right to preserve the stereo image output by the instrument.

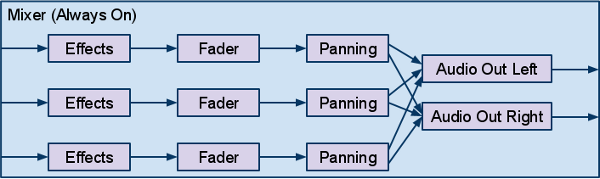

Figure 3. Internal View of a Mixer

Within a mixer, the incoming signals from instruments are routed internally through individual channels. Each channel may pass the signal through different effects processing modules, apply panning, and adjust the signal level with a fader. In Figure 3, a simple mixer is shown where three incoming audio signals are processed, mixed down to a stereo signal, and finally output. In more complicated mixer setups, the signals from channels may be mixed down to subchannels, which can further process the signal before routing it to the master output channel.

Effects

Effects are signal processing units that take in audio signals, process them, and output the processed signal. Common effects include reverb, echo/delay, chorus, compression, and EQ. Like mixers and the always-on signal block of instruments, effects are always on: they process continuously even if no signal is coming into the effects unit.

Effects may also be built to work with mono or stereo signals, where the number of channels in and out may or may not match. The design of an effect may influence where the effect is placed in the signal flow chain, e.g. whether to put the effect before or after the panning module.

Parameters and Automation

Instruments, mixers, and effects all have parameters that are configurable by users. Users work with knobs, faders, or other controls to manipulate these parameters. Users may also use a sequencer to automate the values of these parameters over time. Examples of parameters include filter cutoff frequency, delay time, and feedback amount.

Analysis

From the observations above, the following features are identified as requirements that we will need to implement in our Csound project:

- Instruments
  - Per-Note Signal Block - generates audio on a per-note basis and is bound in time by the length of the note
  - Per-Instrument Signal Block - processes audio on a per-instrument basis and is always on
- Mixer
  - Fader - scales the signal to make it louder or quieter
  - Panning - takes a mono signal and outputs a stereo signal; the panning control determines where in the stereo field the signal is placed
- Effects - process an audio signal; these are always on and may be mono or stereo
- Parameter Automation - allows setting the values of a parameter over time

Now that we have looked at a basic studio setup and identified the features we want, we will move on to implementing them in Csound.

Implementation in Csound

In this section, we will look at implementing each of the four primary concepts mentioned in the previous section: instruments, a mixer, effects, and parameter automation.

The following example CSD shows the complete design implemented in Csound:

    ; EMULATING MIDI-BASED STUDIOS - EXAMPLE
    ; by Steven Yi
    ;
    ; Written for issue 13 of the Csound Journal
    ; http://www.csounds.com/csoundJournal/issue13
    ;
    <CsoundSynthesizer>
    <CsInstruments>
    sr=44100
    ksmps=1
    nchnls=2
    0dbfs=1

    /* SIGNAL ROUTING */
    connect "Saw", "out", "SawBody", "in"
    connect "SawBody", "out", "Mixer", "in1"
    connect "Square", "out", "Mixer", "in2"

    /* ENABLE ALWAYS ON INSTRUMENTS */
    alwayson "SawBody"
    alwayson "Mixer"

    /* USER DEFINED OPCODES - These are used as Effects within the Mixer*/

    opcode FeedbackDelay, a,aii
    setksmps 1
    ain, idelaytime, ifeedback xin
    afeedback init 0
    asig = ain + afeedback
    afeedback delay asig * ifeedback, idelaytime
    xout ain + afeedback
    endop

    /* INSTRUMENT DEFINITIONS */

    instr 1 ; Automation - set value
    Sparam = p4 ; name of parameter to control
    ival = p5 ; value
    kcounter = 0
    if (kcounter == 0) then
    chnset k(ival), Sparam
    turnoff
    endif
    endin

    instr 2 ; Automation instrument
    Sparam = p4 ; name of parameter to control
    istart = p5 ; start value
    iend = p6 ; end value
    ksig line istart, p3, iend
    chnset ksig, Sparam
    endin

    /* The per-note signal block of the Saw instrument */
    instr Saw
    ipch = cpspch(p4)
    iamp = p5
    kenv linsegr 0, .05, 1, 0.05, .9, .1, 0
    kenv = kenv * iamp
    aout vco2 kenv, ipch
    outleta "out", aout
    endin

    /* The always-on signal block of the Saw instrument */
    instr SawBody
    aout inleta "in"
    kcutoff chnget "sawBodyCutoff"
    aout moogladder aout, kcutoff, .07
    outleta "out", aout
    endin

    /* The per-note signal block of the Square instrument */
    instr Square
    ipch = cpspch(p4)
    iamp = p5
    kenv linsegr 0, .05, 1, 0.05, .9, .1, 0
    kfiltenv linsegr 0, .05, 1, 0.05, .5, .1, 0
    aout vco2 1, ipch, 10
    aout lpf18 aout, ipch * 2 + (ipch * 8 * kfiltenv), 0.99, .8
    aout = aout * kenv * iamp
    outleta "out", aout
    endin

    /* Mixer for the project */
    instr Mixer
    ain1 inleta "in1"
    ain2 inleta "in2"

    /* reading in values for automatable parameters */
    kfader1 chnget "fader1"
    kfader2 chnget "fader2"
    kpan1 chnget "pan1"
    kpan2 chnget "pan2"

    ; Applying feedback delay, fader, and panning for the Saw instrument
    ain1 FeedbackDelay ain1, .5, .4
    ain1 = ain1 * kfader1
    aLeft1, aRight1 pan2 ain1, kpan1

    ; Applying fader and panning for the Square instrument
    ain2 = ain2 * kfader2
    aLeft2, aRight2 pan2 ain2, kpan2

    ; Mixing signals from instruments together to "Master Channel"
    aLeft sum aLeft1, aLeft2
    aRight sum aRight1, aRight2

    ; Global Reverb for all Instruments
    ilevel = 0.7
    ifco = 3000
    aLeft, aRight reverbsc aLeft, aRight, ilevel, ifco

    ; Output final audio signal
    outs aLeft, aRight
    endin

    </CsInstruments>
    <CsScore>

    i "Saw" 0 .25 8.00 .5
    i. + . 8.02 .
    i. + . 8.03 .
    i. + . 8.05 .

    i "Saw" 4 .25 8.00 .5
    i. + . 8.02 .
    i. + . 8.03 .
    i. + . 8.05 .

    i "Square" 0 .25 6.00 .5
    i. 1 . 6.00 .5
    i. 2 . 6.00 .5
    i. 3 . 6.07 .5
    i. + . 6.07 .5

    i "Square" 4 .25 6.00 .5
    i. 5 . 6.00 .5
    i. 6 . 6.00 .5
    i. 7 . 5.07 .5
    i. + . 5.07 .5

    ; Initializing values for automatable parameters
    i1 0 .1 "fader1" 1
    i1 0 .1 "pan1" 0
    i1 0 .1 "fader2" 1
    i1 0 .1 "pan2" .75
    i1 0 .1 "sawBodyCutoff" 1000

    ; Setting values for automatable parameters
    i2 0 1 "sawBodyCutoff" 1000 8000
    i2 4 1 "sawBodyCutoff" . .
    i2 4 4 "fader1" 1 .5
    i2 4 1 "pan1" 0 1
    i2 0 4 "fader2" .4 1
    i2 4 4 "fader2" 1 .4

    ; extend score so effects have time to fully process
    f0 10
    </CsScore>
    </CsoundSynthesizer>

In a Csound orchestra, users have two primary abstractions available to them: instruments and User-Defined Opcodes (UDOs). The coding of instruments and UDOs is largely the same, though their usage differs: instruments can be started by the Csound score as well as from the orchestra (by using the event opcode), while UDOs are only callable within the orchestra, from instrument or other UDO code. We will use both instruments and UDOs to emulate a MIDI-based studio setup.
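The difference in how the two abstractions are invoked can be seen in a minimal sketch. The names Gain, Beep, and Trigger are hypothetical and are not part of the example project; the header values are assumptions chosen only to make the sketch complete.

```csound
<CsoundSynthesizer>
<CsInstruments>
sr = 44100
ksmps = 32
nchnls = 2
0dbfs = 1

; Hypothetical UDO: only callable from instrument or UDO code
opcode Gain, a, ak
  ain, kgain xin
  xout ain * kgain
endop

; Hypothetical instrument: can be started from the score or the orchestra
instr Beep
  asig vco2 0.3, 440
  asig Gain asig, 0.5          ; UDO call inside an instrument
  outs asig, asig
endin

; Hypothetical instrument that starts a one-second Beep note
; from orchestra code at init time
instr Trigger
  event_i "i", "Beep", 0, 1
endin

</CsInstruments>
<CsScore>
i "Beep" 0 1       ; Beep started directly by the score
i "Trigger" 2 1    ; Beep started from the orchestra, via Trigger
</CsScore>
</CsoundSynthesizer>
```

Gain never appears in the score; it only runs as part of whichever instrument calls it.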

NOTE: To differentiate between the abstract concept of an Instrument that we are trying to achieve and a Csound instrument, the text from this point on uses instrument (lower-case 'i') for a Csound instrument and Instrument (capital 'I') for the abstract concept.

Implementing Instruments

To implement an Instrument, we will create two basic Csound instruments that handle the per-note and per-instrument audio signal blocks of an Instrument. The Csound instrument Saw represents the per-note audio signal code of the Instrument. Instances of this instrument are created by the notes written in our score. The Csound instrument SawBody represents the always-on part of our Instrument. Since we would like this part to be always running, we use the alwayson opcode in the instr 0 space of our Csound orchestra to keep the SawBody instrument running for the duration of our project. (Note: The alwayson opcode will only keep an instrument on for as long as the total duration of the normal notes within a score. To extend the always-on instrument's processing time beyond the score notes, which allows extra time for Effects like delays and reverbs to finish processing, an f0 statement is used in the score with a time equal to the desired overall project duration.)
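As a point of comparison only, an always-on block can also be started from the score with an explicit duration. The following score fragment is a sketch of that variant, not what the example project does; it assumes the alwayson "SawBody" line has been removed from the orchestra and that a 10-second total duration is wanted.

```csound
; Score fragment (assumption: alwayson "SawBody" is removed from the orchestra)
i "SawBody" 0 10        ; held for 10 seconds, covering the notes plus effect tails

i "Saw" 0 .25 8.00 .5   ; per-note instances are routed to SawBody as before
i. + . 8.02 .
```

The trade-off is that the duration now has to be maintained on the note for SawBody itself, whereas alwayson together with f0 keeps that decision in one place for all always-on instruments.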

The example project also uses a Square instrument that only has a per-note audio signal block. This shows that an Instrument can route signals to the Mixer whether or not it has a per-instrument signal block. Also, by comparing the two Instrument designs, we see that an expensive opcode like moogladder can be instantiated once in the SawBody instrument and used to process all of the notes from the Saw instrument, while a unique instance of lpf18 is used in every note instance of Square. A separate per-instrument block was chosen for the Saw Instrument because its filter was to be used in a global way, while the filter in Square needed to shape the sound of each note. Looking at the designs of the two Instruments may help clarify when to use a single Csound instrument and when to use a pair of instruments in your own work.

Routing Signals

To route signals between the per-note and per-instrument signal blocks of our Instrument, as well as to route signals from the Instruments to the Mixer instrument, the example project uses the Signal Flow Graph Opcodes. (For more information on these opcodes, please see Michael Gogins' article Using the Signal Flow Graph Opcodes, found within the same issue of the Csound Journal as this article.) There are a number of other ways to route signals between instruments, including global a-sigs, the zak family of opcodes, the Mixer opcodes, and chnset and chnget. All of these methods work and can achieve the same end results, but the details of how they are used can make coding easier or more difficult depending on the context of your project. The Signal Flow Graph Opcodes were chosen for this example project for their ease of use and because they use Strings to name and connect instrument signal ports. It is worth investigating the other methods to determine their strengths and weaknesses and to evaluate what is best for your project.
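For comparison, here is a rough sketch of the Saw to SawBody connection written with a global a-sig instead of the Signal Flow Graph Opcodes. The bus name gaSawBus, the instrument numbers, and the fixed cutoff of 2000 are assumptions made for illustration; the fragment belongs in an orchestra with nchnls=2.

```csound
gaSawBus init 0                 ; hypothetical global audio bus

instr 10 ; per-note block: each note adds its output to the bus
  ipch = cpspch(p4)
  iamp = p5
  kenv linsegr 0, .05, 1, .05, .9, .1, 0
  aout vco2 kenv * iamp, ipch
  gaSawBus = gaSawBus + aout
endin

instr 20 ; always-on block: numbered higher so it runs after instr 10
  aout moogladder gaSawBus, 2000, .07
  outs aout, aout
  gaSawBus = 0                  ; clear the bus for the next k-cycle
endin
```

With this approach the summing, the clearing of the bus, and the instrument order are all managed by hand, and the routing is spread across the instrument bodies rather than declared in one place with connect.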

Implementing a Mixer and Effects

To implement a Mixer and Effects, the example project uses a single Csound instrument to contain all of the code for taking in signals from Instruments, passing signals through Effects, applying a fader, and applying panning to the signal. The signals are first brought into the instrument using the inleta opcode. The signals from the Instruments are then optionally sent through Effects, implemented in the project using UDOs. Next, a fader is applied by multiplying a signal by a scaling factor, and panning is performed using the pan2 opcode. Finally, the signals are mixed together using the sum opcode and output to file or soundcard using the outs opcode.
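Since the fader is simply a multiplication by a scaling factor, it could also be driven by a value in decibels, as on many hardware and software mixers. The fragment below is only a sketch of that idea for channel 1 of the Mixer; the channel name "fader1dB" is hypothetical and does not exist in the example project.

```csound
; Inside instr Mixer, in place of the linear fader for channel 1
kfader1dB chnget "fader1dB"     ; hypothetical channel holding a value in dB
kfader1 = ampdb(kfader1dB)      ; 0 dB gives a factor of 1, -6 dB about 0.5
ain1 = ain1 * kfader1
```

The linear fader used in the project is the simpler choice; the dB form mainly helps when automation values are easier to think about in decibels.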

By using a single Csound instrument to hold all of the mixer code, we gain the advantage of handling all of that code in a single place. Also, by using UDOs for Effects instead of a separate instrument per Effect, as is shown in the manual example for the Signal Flow Graph Opcodes, we are able to use multiple instances of the same Effect in the same project, inserting it wherever we like within the mixer's internal signal flow graph. For example, while the example project has only one instance of the FeedbackDelay UDO Effect within the mixer, we could easily add another instance of the effect elsewhere, say after the signal input from the Square Instrument. A final advantage of a single Mixer instrument is that only one call to the alwayson opcode is needed for everything within the Mixer.
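The second FeedbackDelay instance mentioned above might look like the following fragment inside the Mixer instrument. The delay time and feedback values are illustrative assumptions, not settings from the project.

```csound
; Inside instr Mixer, after ain2 has been read with inleta
; Second, independent instance of the same UDO, here on the Square channel
ain2 FeedbackDelay ain2, .25, .3
ain2 = ain2 * kfader2
aLeft2, aRight2 pan2 ain2, kpan2
```

Each call to the UDO keeps its own delay line and feedback state, so the two channels do not interfere with one another.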

Implementing Parameter Automation

The last feature of the basic MIDI-based studio to implement in our project is parameter automation. To achieve this, the example project uses the chnget and chnset opcodes to read and write the values of our parameters. These opcodes were chosen because they allow a String value, such as "fader1" or "pan1", to identify a parameter, which makes the names easy to read and easy to remember.

The project was originally developed with hardcoded values for parameters, then later modified to replace the hardcoded values with calls to chnget so that those values could be automated. To set the parameter values, two special instruments were created: instr 1, which sets a parameter to a value and then immediately turns itself off, and instr 2, which takes a start and an end value for the parameter and changes the parameter value linearly over time. The two special instruments allow parameter values to be set from the score in a generic way, as the instruments take a String name from the score for the parameter to modify. This design allows the two instruments to affect any parameter used in the entire project.
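The step from a hardcoded value to an automatable one can be sketched for the filter cutoff in SawBody. The "before" line is a reconstruction for illustration; the original hardcoded value is not given in the article, so 1000 is an assumption taken from the initial value used in the score.

```csound
; Before (assumed): cutoff fixed in the orchestra
; aout moogladder aout, 1000, .07

; After: cutoff read from a named channel on every k-cycle
kcutoff chnget "sawBodyCutoff"
aout moogladder aout, kcutoff, .07

; Matching score lines, shown here as comments:
; i1 0 .1 "sawBodyCutoff" 1000        ; instr 1 sets the value once
; i2 0 1 "sawBodyCutoff" 1000 8000    ; instr 2 ramps it over one second
```

Because the channel name is passed in p4, the same two automation instruments serve every parameter without any change to their code.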

In the example project, parameters are exposed for automation in both the SawBody instrument and the Mixer instrument. While the project only makes sawBodyCutoff, fader1, fader2, pan1, and pan2 automatable, any value can be made automatable using chnget with this design. At the end of the CsScore section of the CSD, all of the notes dealing with the parameters are grouped together. The section starts off by initializing the start values of the parameters using notes for instr 1, followed by linear automation of parameters over time with notes for instr 2. Listening to the rendered output of the project should audibly demonstrate the changing of those parameters over time.

Note: At the time of this article, Csound has a design limitation that allows score notes a maximum of one pfield that can be a String. Since we need to use a String for the parameter name to make this work, numbered instruments are used. When the design limitation is addressed in Csound's codebase and more than one String is allowed in a note, the special automation instruments may then be used as named instruments.

Conclusion

MIDI-based studio setups offer users the ability to work with well-known tools of computer music: Instruments, Effects, Mixers, and parameter automation. Hopefully those who are familiar with these tools can now find a way to take their experience and apply it to their Csound work. While the article has shown how to implement a basic form of these tools, there are certainly more advanced features from studios that can be implemented. The reader is encouraged to take the knowledge from this article and continue to explore and develop their Csound setups, building more complex parameter automation instruments (e.g. non-linear curves, randomized jitter, oscillating values), implementing subchannels within their Mixers, as well as exploring other signal routing techniques like sends and sidechaining. Hopefully, with time, the reader will feel comfortable applying their knowledge and experience from MIDI-based studios to Csound, as well as discovering all of the unique qualities of Csound to expand their musical horizons.
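As one possible starting point for the more complex automation instruments mentioned above, the following sketch adds an exponential curve and an oscillating value, following the same pattern as instr 2. The instrument numbers 3 and 4 and their p-field layouts are hypothetical and are not part of the example project.

```csound
instr 3 ; Automation - exponential curve (hypothetical addition)
Sparam = p4   ; name of parameter to control
istart = p5   ; start value (must be non-zero, same sign as iend)
iend = p6     ; end value (must be non-zero)
ksig expon istart, p3, iend
chnset ksig, Sparam
endin

instr 4 ; Automation - sine LFO around a center value (hypothetical addition)
Sparam = p4   ; name of parameter to control
icenter = p5  ; center value
idepth = p6   ; maximum deviation from the center
irate = p7    ; LFO rate in Hz
klfo lfo idepth, irate
chnset icenter + klfo, Sparam
endin

; Example score lines, shown here as comments:
; i3 0 4 "sawBodyCutoff" 500 8000
; i4 4 4 "pan1" .5 .5 2
```

Both instruments write to the same named channels as instr 1 and instr 2, so they can be mixed freely with the existing automation notes, provided that two notes do not write to the same parameter at the same time.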